Exploring the Power of Few-shot Learning with GPT-3: Understanding its Applications and Limitations

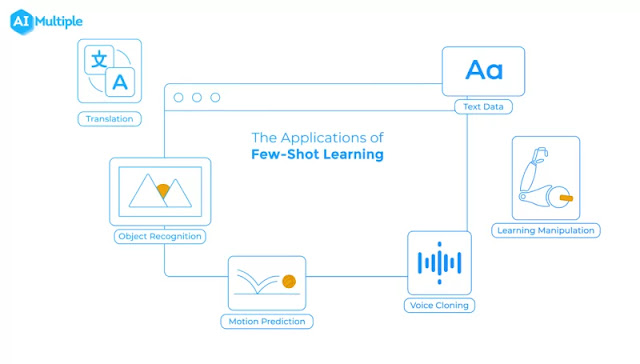

Image credit: AI Multiple

Introduction:

Few-shot learning is a growing area of artificial intelligence concerned with getting machine learning models to perform well on a task when only a handful of labeled examples are available. It has attracted significant attention in recent years, especially since large language models such as Generative Pre-trained Transformer 3 (GPT-3) showed that they can pick up a new task from just a few examples. In this blog, we'll explore the concept of few-shot learning and see how GPT-3 has revolutionized this field.

What is Few-shot Learning?

Few-shot learning is a type of machine learning in which a model must perform a task after seeing only a small number of labeled examples, sometimes just one or a handful per class. The goal is for the model to learn from those few examples and then generalize to new inputs, and ideally to new classes or tasks it was not explicitly trained on. This is particularly useful when data is scarce or when collecting and labeling more data is too expensive.
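To make the idea concrete, here is a minimal sketch of one classic few-shot approach, nearest-centroid (prototype) classification, in plain Python. It assumes every example has already been turned into a numeric feature vector; the 2-D features, the "cat"/"dog" labels and the function names are made up for illustration. Each class is summarized by the average of its few labeled examples, and a new input is assigned to the class whose average is closest. Methods such as prototypical networks learn those feature vectors with a neural network; this sketch skips that step.

```python
from math import dist  # Euclidean distance, Python 3.8+


def class_prototypes(support):
    """Average the feature vectors of each class's few labeled examples."""
    prototypes = {}
    for label, vectors in support.items():
        n = len(vectors)
        dims = len(vectors[0])
        prototypes[label] = tuple(sum(v[i] for v in vectors) / n for i in range(dims))
    return prototypes


def classify(query, prototypes):
    """Assign the query to the class whose prototype is closest."""
    return min(prototypes, key=lambda label: dist(query, prototypes[label]))


# A toy "2-way, 3-shot" support set: two classes, three examples each.
# The feature values are hypothetical, chosen only for illustration.
support = {
    "cat": [(1.0, 1.2), (0.8, 1.0), (1.1, 0.9)],
    "dog": [(3.0, 3.1), (2.8, 3.3), (3.2, 2.9)],
}
prototypes = class_prototypes(support)
print(classify((1.0, 1.1), prototypes))  # prints: cat
print(classify((2.9, 3.0), prototypes))  # prints: dog
```

Adding a new class only requires supplying a few labeled examples for it; nothing else has to be retrained in this simplified setting.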

Applications of Few-shot Learning:

Few-shot learning has a wide range of applications across various domains, including computer vision, natural language processing, and speech recognition. For example, in computer vision, few-shot learning can be used to recognize new object categories from just a few labeled images. In natural language processing, it can be applied to text classification tasks, such as sentiment analysis, when only a small amount of labeled data is available.

How GPT-3 has Revolutionized Few-shot Learning:

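GPT-3, developed by OpenAI and released in 2020, was at the time one of the largest language models ever built, with 175 billion parameters. It is a generative pre-trained Transformer: a deep neural network trained on a large text corpus to predict the next token, which lets it generate human-like text. One of its key features is in-context few-shot learning. Instead of being retrained for a new task, GPT-3 is given a short instruction and a few worked examples as part of its input prompt, and it continues the pattern for a new input. Because no model weights are updated, the same model can take on a new task simply by changing the examples in the prompt, often with strong results, which makes it a powerful tool for few-shot learning.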
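The sketch below shows what such a few-shot prompt might look like for the sentiment analysis task mentioned earlier. The function name, the "Review:"/"Sentiment:" format and the example reviews are all hypothetical; the code only assembles the prompt text. Sending it to GPT-3 (or a similar model) would go through whatever completion interface you use, and that call is deliberately left out here rather than guessing at a specific client API.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Combine an instruction, a few labeled demonstrations and a new input
    into a single text prompt. The demonstrations live only in the prompt;
    no model weights are changed."""
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Review: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # The new input ends with an empty "Sentiment:" slot for the model to fill in.
    lines.append(f"Review: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)


# Three hypothetical demonstrations: a "3-shot" prompt.
examples = [
    ("The battery lasts all day and the screen is gorgeous.", "Positive"),
    ("It stopped working after a week.", "Negative"),
    ("Setup was quick and painless.", "Positive"),
]
prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as Positive or Negative.",
    examples,
    "The speakers crackle at any volume.",
)
print(prompt)
```

Switching to a different task, such as topic labeling, would only mean changing the instruction and the demonstrations; the model itself stays the same.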

Advantages of using GPT-3 for Few-shot Learning:

There are several advantages of using GPT-3 for few-shot learning: it generates fluent, high-quality text, it can perform well on tasks with very little labeled data, and it can generalize to new tasks without task-specific training. These abilities come largely from its scale. GPT-3 was pretrained on a massive amount of text, which gives it broad general knowledge that a handful of examples can then steer toward a particular task.

Limitations of GPT-3 for Few-shot Learning:

While GPT-3 is a powerful tool for few-shot learning, it also has limitations. For example, it can reproduce biases or perspectives present in its training data, and it may generate text that is inaccurate or inappropriate. Additionally, GPT-3 is a very large model that requires significant computing resources to run, as well as technical expertise to use effectively.

Conclusion:

Few-shot learning is an important and rapidly growing area of artificial intelligence. GPT-3, developed by OpenAI, has revolutionized the field with its ability to perform well on tasks given only a few examples. However, it is important to keep GPT-3's limitations in mind and to use it responsibly to avoid potential biases and inaccuracies. Few-shot learning with GPT-3 is a powerful tool with the potential to transform many industries and fields, but it should be used with caution and careful consideration.
